Add option to exclude first moment bias-correction in Adam/AdamW/other Adam variants. · Issue #67105 · pytorch/pytorch · GitHub

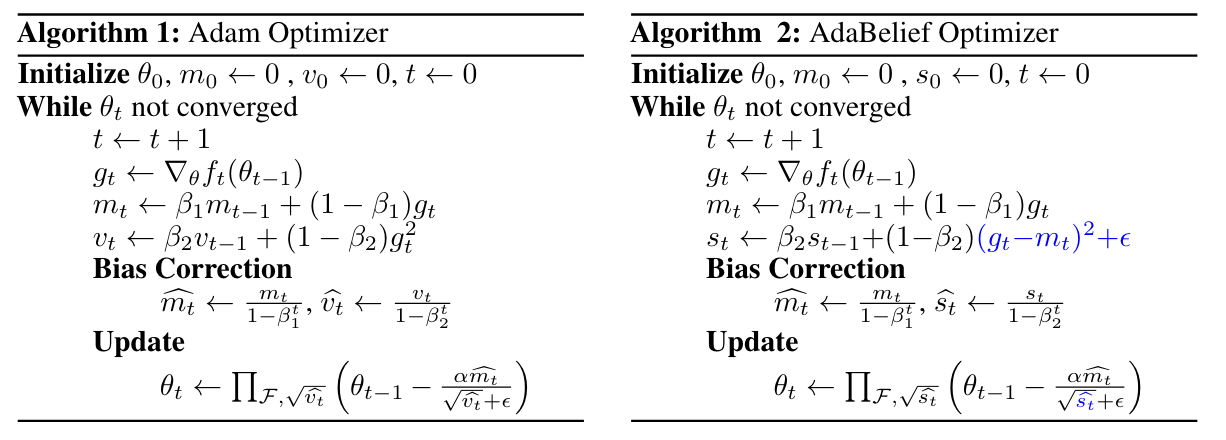

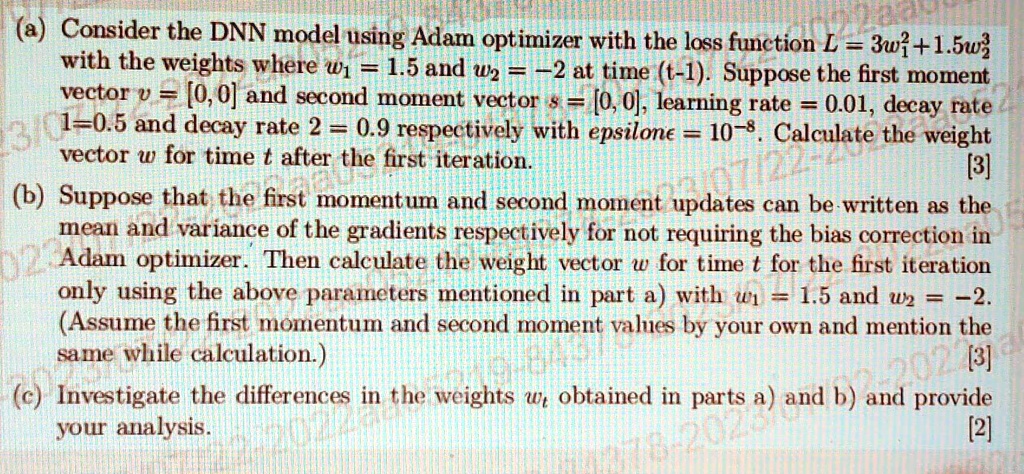

SOLVED: (a) Consider the DNN model using the Adam optimizer with the loss function L = 3w + 1.5w, where the weights are w1 = 1.5 and w2 at time t-1. Suppose

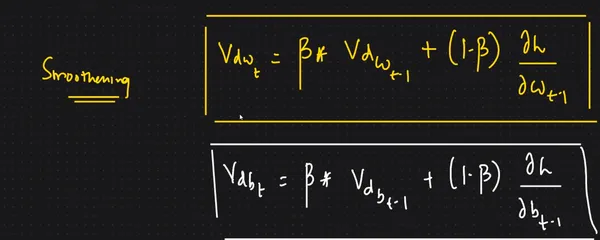

RNN / LSTM with modified Adam optimizer in deep learning approach for automobile spare parts demand forecasting | Multimedia Tools and Applications

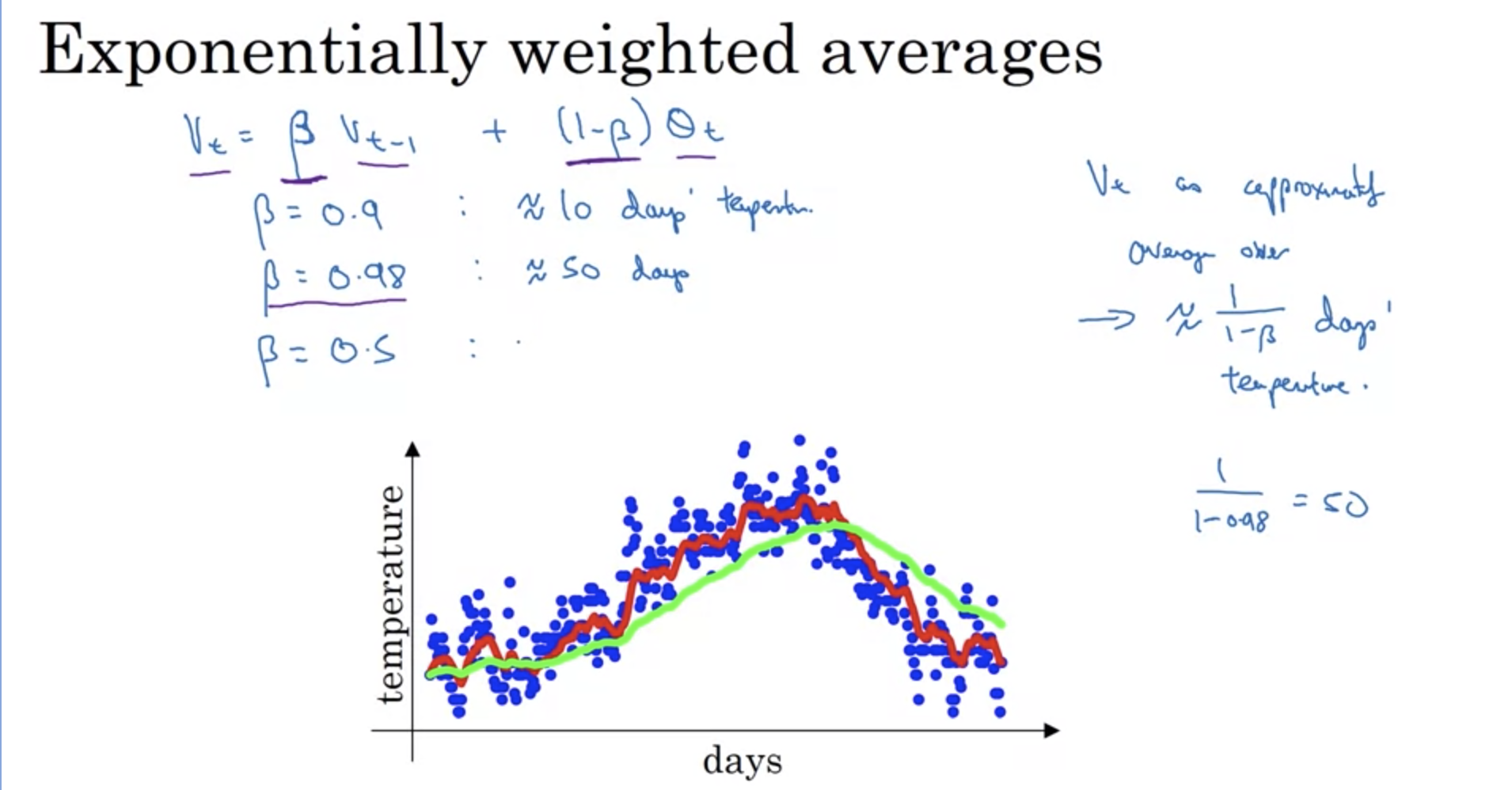

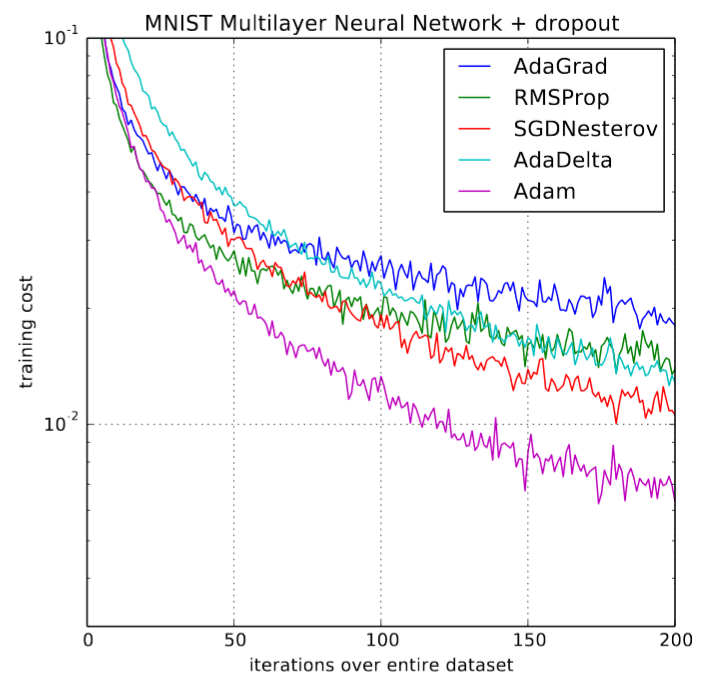

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com